This is just a collection of things I found and thought were interesting while playing with the idea of 360 panoramic views in Unity.

YouTube supports panoramic video, and I figured that would be a good way to see which mappings are widely supported. They don't outright specify the format, but from looking at the videos that are up, they appear to use an equirectangular projection.

I've implemented something in Unity that works with those videos. Essentially, it comes down to putting a sphere around the Unity camera with a UV mapping that matches that projection. For testing I used an image from here, and I also tried out the video here.

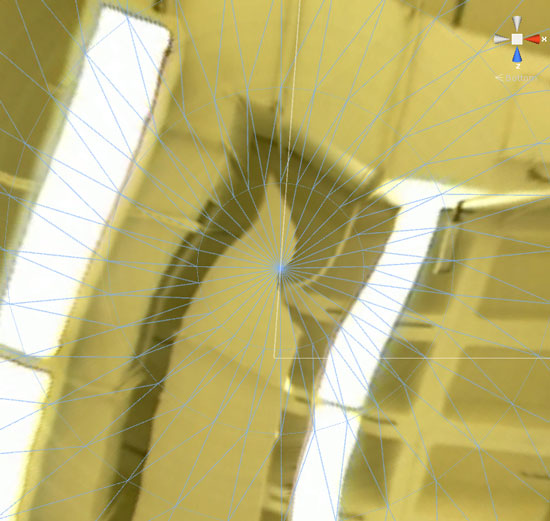
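The core of that UV mapping is a function from a view direction to a texture coordinate. Below is a minimal sketch in Python rather than Unity C#, assuming Unity-style axes (+y up, +z forward, +x right), that the centre of the equirectangular image is straight ahead, and that v = 0 is the bottom of the texture as in Unity. The function name is hypothetical. Longitude maps linearly to u and latitude maps linearly to v, which is what "equirectangular" means.

```python
import math

def direction_to_equirect_uv(x, y, z):
    """Map a (non-zero) view direction to (u, v) in an equirectangular image.

    Assumes +y up, +z forward, +x right (Unity-style axes).
    u = 0.5 is straight ahead; v = 0 is straight down, v = 1 straight up.
    """
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    lon = math.atan2(x, z)                   # longitude in [-pi, pi], 0 = forward
    lat = math.asin(max(-1.0, min(1.0, y)))  # latitude in [-pi/2, pi/2]
    u = 0.5 + lon / (2 * math.pi)            # longitude spreads evenly across u
    v = 0.5 + lat / math.pi                  # latitude spreads evenly across v
    return u, v


for d in [(0, 0, 1), (1, 0, 0), (0, 1, 0)]:
    print(d, direction_to_equirect_uv(*d))
```

Looking straight behind the camera lands on u = 0 or u = 1 depending on the sign of a zero x component, which is exactly where the seam discussed below comes from.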

One thing I used from the Unity Asset Store is Project UVs from Camera. It can project flat or spherically. I think it has its uses, but it ran into limitations when the sphere went entirely around the camera. There would be a vertical stripe where the UVs reached one edge of the map and wrapped around to the other, kind of like this:

Essentially, the problem is that it calculates the UV per vertex, but one column of triangles (the one straddling the seam) ends up with vertices that wrap from 360° back to 0°, so those triangles span the whole texture backwards. I wrote my own UV sphere projection script that looped through each triangle, and if a triangle had one vertex near one edge of the texture and two near the other, it shifted the lone vertex's U coordinate over by a full texture width.
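The per-triangle fix described above can be sketched as follows. This is a minimal illustration, not the original script: `fix_seam_triangles` is a hypothetical name, UVs are assumed to be (u, v) pairs with u in [0, 1), and the texture is assumed to use repeat wrapping so that u slightly above 1 or below 0 samples the opposite edge. Because the shifted vertex is shared with triangles that do not straddle the seam, the sketch duplicates it instead of editing it in place.

```python
def fix_seam_triangles(uvs, triangles):
    """Return (new_uvs, new_triangles) with seam-straddling triangles repaired.

    uvs: list of (u, v) tuples with u in [0, 1).
    triangles: list of (i0, i1, i2) vertex index triples.
    A triangle whose u values differ by more than half the texture is assumed
    to wrap across the seam; its lone vertex is duplicated with u shifted by
    +1 or -1 so the triangle covers a narrow strip instead of the whole map.
    In a real mesh the position and normal would be duplicated as well.
    """
    new_uvs = list(uvs)
    new_tris = []
    for tri in triangles:
        us = [uvs[i][0] for i in tri]
        if max(us) - min(us) <= 0.5:
            new_tris.append(tuple(tri))  # ordinary triangle, keep as-is
            continue
        low = [k for k, u in enumerate(us) if u < 0.5]
        high = [k for k, u in enumerate(us) if u >= 0.5]
        if len(low) == 1:
            k, shift = low[0], 1.0    # one vertex near u = 0: push it past 1
        else:
            k, shift = high[0], -1.0  # one vertex near u = 1: pull it below 0
        u, v = uvs[tri[k]]
        new_uvs.append((u + shift, v))  # duplicated vertex with shifted U
        fixed = list(tri)
        fixed[k] = len(new_uvs) - 1
        new_tris.append(tuple(fixed))
    return new_uvs, new_tris
```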

I ended up using this YouTube tutorial as the basis for unwrapping the sphere in Blender. It applies a Mercator-projection map of the world as the texture, which is a close relative of the mapping we're looking for (both are cylindrical projections, though Mercator stretches the latitudes toward the poles while equirectangular spaces them evenly). One good detail is that he marks a vertical seam on the sphere, which fixes the wrapping problem I mentioned.
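Marking a seam works because the vertices along it get split: one copy carries u = 0 and the other u = 1, even though they share a position. A minimal sketch of generating such a UV sphere procedurally is below, using the same axis and UV conventions assumed earlier (+y up, +z forward, u = 0.5 straight ahead). The function name and segment counts are hypothetical. The triangle winding may need flipping depending on whether the sphere is meant to be viewed from the inside, and the rows touching the poles contain degenerate triangles, which is harmless here.

```python
import math

def uv_sphere(lat_segments, lon_segments, radius=1.0):
    """Build (vertices, uvs, triangles) for a sphere with equirectangular UVs.

    Each latitude row has lon_segments + 1 vertices: the first (u = 0) and the
    last (u = 1) share a position, which acts as the seam, so no triangle
    ever spans the texture from one edge to the other.
    """
    verts, uvs, tris = [], [], []
    for i in range(lat_segments + 1):
        v = i / lat_segments                 # 0 at the bottom pole, 1 at the top
        lat = (v - 0.5) * math.pi            # -pi/2 .. pi/2
        for j in range(lon_segments + 1):
            u = j / lon_segments             # 0 .. 1 inclusive
            lon = (u - 0.5) * 2 * math.pi    # -pi .. pi, 0 = forward (+z)
            verts.append((radius * math.cos(lat) * math.sin(lon),
                          radius * math.sin(lat),
                          radius * math.cos(lat) * math.cos(lon)))
            uvs.append((u, v))
    cols = lon_segments + 1
    for i in range(lat_segments):
        for j in range(lon_segments):
            a = i * cols + j       # bottom-left of this quad
            b = a + 1              # bottom-right
            c = a + cols           # top-left
            d = c + 1              # top-right
            tris.append((a, c, b))
            tris.append((b, c, d))
    return verts, uvs, tris
```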

That tutorial was linked from this demonstration of Unity + Oculus Rift + 360 panorama video on Vimeo. The creator followed up with his own tutorial, which covers pretty much what I was doing: he UV maps a sphere, places the viewer at the center, and plays the video on it. Exactly how to map the sphere was the crucial part for me.

The downside is that these still show some distortion at the two poles of the sphere. This might be inherent to the equirectangular projection, since the entire top and bottom rows of the image each collapse to a single point. I think that projection is popular because it still looks reasonable when viewed as a flat image, but it's a bit of a compromise if you just want something to use in-game.
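One way to see why the poles suffer: every row of an equirectangular image has the same pixel width, but the circle of latitude it wraps around shrinks in proportion to cos(latitude). So, relative to the equator, a row is squeezed horizontally by a factor of 1/cos(latitude). The helper below is a hypothetical illustration of that arithmetic, not something from the tutorials above.

```python
import math

def equirect_horizontal_stretch(lat_deg):
    """How many times more texels per unit of arc a row has than the equator.

    A row at latitude lat covers a circle of circumference 2*pi*cos(lat) but
    always spans the full image width, so the density grows as 1/cos(lat).
    At exactly +/-90 degrees the whole row collapses to a single point.
    """
    return 1.0 / math.cos(math.radians(lat_deg))


for lat in (0, 30, 60, 80, 89):
    print(f"{lat:2d} deg: {equirect_horizontal_stretch(lat):.2f}x")
```

By 60° a row is already packed twice as densely as the equator, and near the poles the factor blows up, which is where the pinching and smearing show up on the sphere.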

I could just make up an arbitrary projection and use that, but I want a way to produce video for it, so sticking to established standards would be preferable. One stitching program for creating panoramic video, Autopano, lists its supported projections here. The Hammer projection and "little planet" might be nice alternatives if I want at least one pole undistorted.
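For comparison, here are the textbook forward formulas for those two alternatives, taking longitude and latitude in radians and returning flat image-plane coordinates. This is a sketch under assumptions: "little planet" is taken to mean a stereographic projection centred on the nadir (straight down), and the orientation and scaling Autopano actually uses for either projection are not known here. Both function names are hypothetical.

```python
import math

def hammer(lon, lat):
    """Hammer (Hammer-Aitoff) equal-area projection.

    Output x lies in [-2*sqrt(2), 2*sqrt(2)] and y in [-sqrt(2), sqrt(2)].
    """
    d = math.sqrt(1 + math.cos(lat) * math.cos(lon / 2))
    x = 2 * math.sqrt(2) * math.cos(lat) * math.sin(lon / 2) / d
    y = math.sqrt(2) * math.sin(lat) / d
    return x, y

def little_planet(lon, lat):
    """Stereographic projection centred on the nadir (lat = -pi/2).

    The nadir maps to the origin with no distortion; the zenith maps to
    infinity, so in practice the image is cropped to some maximum radius.
    """
    theta = math.pi / 2 + lat      # angular distance from the nadir
    r = 2 * math.tan(theta / 2)    # stereographic radius on a unit sphere
    return r * math.sin(lon), r * math.cos(lon)
```

The Hammer projection keeps areas correct but still shears shapes near its poles, whereas the stereographic "little planet" is conformal and leaves the region around its centre pole essentially undistorted, at the cost of blowing up the opposite pole.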

John Carmack mentioned cube map support for Gear VR. Autopano doesn’t seem to list that as an option, but according to this tutorial, software called Pano2VR includes some cube-based formats.
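A cube map avoids the pole problem by splitting the view into six square faces, and sampling one comes down to picking the face from the largest component of the view direction and then dividing the other two components by it. The sketch below follows the face-selection table in the OpenGL specification; face layouts and orientations in Gear VR, Pano2VR, or Unity may differ, so treat the exact signs as an assumption. The function name is hypothetical.

```python
def cube_face_uv(x, y, z):
    """Return (face, s, t) for a non-zero direction, with s, t in [0, 1].

    Uses the OpenGL cube-map convention: the axis with the largest absolute
    component picks the face, and the remaining two components, divided by
    that magnitude, give the position on the face.
    """
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        face, sc, tc, ma = ("+x", -z, -y, ax) if x > 0 else ("-x", z, -y, ax)
    elif ay >= az:
        face, sc, tc, ma = ("+y", x, z, ay) if y > 0 else ("-y", x, -z, ay)
    else:
        face, sc, tc, ma = ("+z", x, -y, az) if z > 0 else ("-z", -x, -y, az)
    s = (sc / ma + 1) / 2  # map [-1, 1] on the face to [0, 1]
    t = (tc / ma + 1) / 2
    return face, s, t
```

Because each face is a perspective projection with a 90° field of view, the sampling density varies only moderately across a face (at most about 3x between the face centre and its corners, versus the unbounded stretch of equirectangular near the poles).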

I also used this post as a guide to rendering a panoramic image in Blender, and using that as the sphere texture seems to work.

This is a slight tangent, but here is a camera on the Unity Asset Store that renders a 360 view from within Unity. You could potentially render that to a texture on the sphere and have a sort of crystal ball. I haven't tried it yet, but it's interesting.